By default, a Java application runs in a single process.

Within a Java application, we can use multiple threads to achieve parallel processing or asynchronous behavior.

What You Need

- About 7 minutes

- A favorite text editor or IDE

- Java 8 or later

1. Why Multi-Threading

As we all know, the CPU, memory, and I/O devices run at very different speeds.

To make full use of the CPU and bridge these speed gaps, computer architecture, operating systems, and compilers have each contributed:

- The CPU adds caches to narrow the speed gap with memory;

- The operating system adds processes and threads to time-share the CPU, so the CPU can do other work instead of waiting for slow I/O devices;

- The compiler optimizes the execution order of instructions so that caches are used more effectively.

However, each of these optimizations also introduces a problem for concurrent programming:

- Visibility Problem;

- Atomicity Problem;

- Orderness Problem.

2. Problems With Multi-Threading

If multiple threads access the same shared data without synchronization, the results of their operations may be inconsistent.

2.1 Visibility – Caused By CPU Cache

Visibility means that when one thread modifies shared data, other threads can see the change immediately.

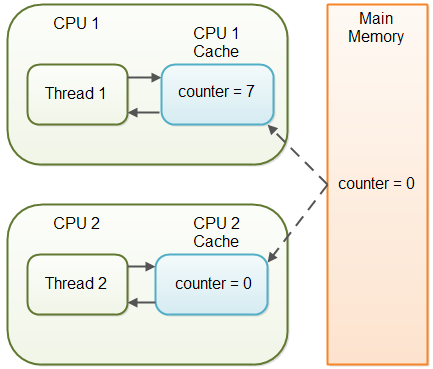

In a multi-threaded application running on a computer with more than one CPU, each thread may, for performance reasons, copy data from main memory into its CPU's cache and work on that copy.

There are no guarantees about when the JVM reads data from main memory into CPU caches, or writes data from CPU caches to main memory.

Imagine that thread 1 changes data (initially 0) to 7, and then thread 2 reads data. Thread 2 should see the value 7.

However, there may be a moment when thread 1 has set data to 7 in its CPU cache but has not yet written it back to main memory, and at that moment thread 2 reads data from main memory.

In that case, thread 2 ends up with data = 0 instead of 7.
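The stale-read scenario can be made concrete with a small stop-flag program. This is a minimal sketch under our own assumptions: the class VisibilityExample and the method stopReader are hypothetical names, and it relies on the volatile keyword, which the Java Memory Model defines so that a write to the field happens-before every later read of it by another thread. The broken variant (the same field without volatile) is not run here because it is allowed to loop forever, and whether it does depends on the JVM and hardware.

```java
// Hypothetical example class; a sketch, not the article's own code.
class VisibilityExample {

    // volatile: a write to this field by one thread is guaranteed to be
    // visible to later reads by other threads. Without it, the reader
    // thread below may keep seeing a stale 'true' and spin forever.
    private static volatile boolean running = true;

    // Starts a reader that spins until it observes running == false, then
    // clears the flag from the calling thread. Returns true if the reader
    // saw the update and exited within 5 seconds.
    static boolean stopReader() {
        running = true;
        Thread reader = new Thread(() -> {
            while (running) {
                // busy-wait: re-reads the volatile field on every iteration
            }
        });
        reader.setDaemon(true); // never keep the JVM alive if something goes wrong
        reader.start();
        try {
            Thread.sleep(100); // give the reader time to start spinning
            running = false;   // this write becomes visible to the reader
            reader.join(5_000);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return !reader.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("Reader stopped: " + stopReader());
    }
}
```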

2.2 Atomicity – Caused By CPU Time Multiplexing

Atomicity means that an operation, or a group of operations, either executes completely without being interrupted, or does not execute at all.

The following example submits 1000 tasks that each increment a shared counter concurrently. When all tasks have completed, the final value may be less than 1000.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class ThreadUnsafeExample {

    public static void main(String[] args) throws InterruptedException {
        int count = 1000;
        // Lets the main thread wait until all 1000 tasks have finished.
        CountDownLatch cdl = new CountDownLatch(count);
        ExecutorService es = Executors.newCachedThreadPool();
        Counter counter = new Counter();
        try {
            for (int i = 0; i < count; i++) {
                es.execute(() -> {
                    counter.add();
                    cdl.countDown();
                });
            }
        } finally {
            // Stops accepting new tasks; already submitted tasks still run.
            es.shutdown();
        }
        cdl.await();
        System.out.println(counter.get());
    }

    private static class Counter {

        private int count = 0;

        // Not thread-safe: count++ is a read-modify-write sequence, not one atomic step.
        void add() {
            this.count++;
        }

        int get() {
            return this.count;
        }
    }
}
```

Below is the output of one run of the above code snippet (the exact value varies from run to run):

```
994
```

The statement this.count++ is not a single step; it boils down to roughly three CPU instructions:

- Read the value of count from memory into a CPU register;

- Add 1 to the value in the register;

- Write the result back to count in memory.

Because of CPU time-sharing (thread switching), the system may switch from thread 1 to thread 2 right after thread 1 executes the first instruction.

If thread 2 then executes all three instructions, thread 1 later resumes and executes the remaining two instructions based on the value of count it read before thread 2's update. Thread 2's increment is overwritten, so the final value of count is less than it should be.
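The lost update described above can be replayed deterministically on a single thread. In this sketch (the class LostUpdateReplay is hypothetical), the local variables r1 and r2 stand in for each thread's CPU register, and the statements run in exactly the interleaving just described.

```java
// Hypothetical single-threaded replay of the interleaving described above.
class LostUpdateReplay {

    static int replay() {
        int count = 0;   // shared value in memory

        int r1 = count;  // thread 1, step 1: read count (0) into its register
                         // ... thread 1 is switched out here ...
        int r2 = count;  // thread 2, step 1: read count (0)
        r2 = r2 + 1;     // thread 2, step 2: add 1 -> 1
        count = r2;      // thread 2, step 3: write 1 to memory
                         // ... thread 1 resumes with its stale register value ...
        r1 = r1 + 1;     // thread 1, step 2: add 1 -> 1 (based on the old 0)
        count = r1;      // thread 1, step 3: write 1, overwriting thread 2's result
        return count;    // two increments were performed, but count is 1
    }

    public static void main(String[] args) {
        System.out.println("count = " + replay()); // prints "count = 1"
    }
}
```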

2.3 Orderness – Caused By Instructions Reordering

Orderness means that a program executes in the order in which its code is written.

Below is an example of a problem caused by instruction reordering.

It defines four static variables x, y, a, b, and sets them to 0 at the start of each loop iteration.

It then starts two threads: the first executes a=1 and then x=b, and the second executes b=1 and then y=a. The loop stops at the first round that ends with x=0 and y=0.

```java
public class OrdernessProblemExample {

    private static int x, y, a, b;

    public static void main(String[] args) throws InterruptedException {
        int i = 0;
        while (true) {
            // Reset the shared variables before every round.
            x = 0;
            y = 0;
            a = 0;
            b = 0;

            Thread t1 = new Thread(() -> {
                a = 1;
                x = b;
            });
            Thread t2 = new Thread(() -> {
                b = 1;
                y = a;
            });

            t1.start();
            t2.start();
            // join() waits for both threads, so main sees their final writes.
            t1.join();
            t2.join();

            System.out.println("Round " + (++i) + " : x = " + x + " y = " + y);
            // Stop at the first round in which both reads saw 0.
            if (x == 0 && y == 0) {
                break;
            }
        }
    }
}
```

Below is the tail of the output from one run of the above code snippet (the round in which x = 0 and y = 0 first appears varies between runs):

```
Round 3300 : x = 0 y = 1
Round 3301 : x = 0 y = 1
Round 3302 : x = 0 y = 1
Round 3303 : x = 0 y = 1
Round 3304 : x = 0 y = 1
Round 3305 : x = 0 y = 1
Round 3306 : x = 0 y = 1
Round 3307 : x = 0 y = 1
Round 3308 : x = 0 y = 1
Round 3309 : x = 0 y = 1
Round 3310 : x = 0 y = 1
Round 3311 : x = 0 y = 1
Round 3312 : x = 0 y = 1
Round 3313 : x = 0 y = 1
Round 3314 : x = 0 y = 1
Round 3315 : x = 0 y = 1
Round 3316 : x = 0 y = 1
Round 3317 : x = 0 y = 1
Round 3318 : x = 0 y = 0
```

Intuitively, the above program has three possible results:

- Both threads execute their first statement (a=1 and b=1) before either executes its second; finally x=1, y=1;

- The first thread finishes before the second thread starts; finally x=0, y=1;

- The second thread finishes before the first thread starts; finally x=1, y=0.

In theory, x=0 and y=0 is impossible, yet the output above shows that it does happen.

This is because instructions were reordered: x=b took effect before a=1, and y=a took effect before b=1.

In the first thread, a=1 and x=b have no data dependency on each other, so the compiler (including the JIT compiler) and the CPU are allowed to reorder them to improve performance.

The same applies to b=1 and y=a in the second thread.
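The claim that only three results are possible without reordering can be checked mechanically. The sketch below (the class InterleavingExplorer and its methods are hypothetical) enumerates every interleaving of the two threads' statements and collects the resulting x and y values. It is a simplified model, assuming each statement executes atomically and that reordering can be represented by swapping each thread's two independent statements; it does not model how a real compiler or CPU actually produces the reordering.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

// Hypothetical brute-force explorer for the two-thread example above.
class InterleavingExplorer {

    // Applies a statement of the form "v=c" (constant digit) or "v=w" (copy variable w).
    private static void apply(String op, int[] vars) {
        int target = op.charAt(0) - 'a';
        char source = op.charAt(2);
        vars[target] = Character.isDigit(source) ? source - '0' : vars[source - 'a'];
    }

    // Returns every distinct "x=?,y=?" result over all interleavings that
    // keep each thread's own statements in the given order.
    static Set<String> outcomes(String[] t1, String[] t2) {
        Set<String> results = new TreeSet<>();
        explore(t1, 0, t2, 0, new ArrayList<>(), results);
        return results;
    }

    private static void explore(String[] t1, int i, String[] t2, int j,
                                List<String> schedule, Set<String> results) {
        if (i == t1.length && j == t2.length) {
            int[] vars = new int[26]; // variables a..z, all starting at 0
            for (String op : schedule) {
                apply(op, vars);
            }
            results.add("x=" + vars['x' - 'a'] + ",y=" + vars['y' - 'a']);
            return;
        }
        if (i < t1.length) { // next statement comes from thread 1
            schedule.add(t1[i]);
            explore(t1, i + 1, t2, j, schedule, results);
            schedule.remove(schedule.size() - 1);
        }
        if (j < t2.length) { // next statement comes from thread 2
            schedule.add(t2[j]);
            explore(t1, i, t2, j + 1, schedule, results);
            schedule.remove(schedule.size() - 1);
        }
    }

    public static void main(String[] args) {
        // Program order: prints [x=0,y=1, x=1,y=0, x=1,y=1]
        System.out.println(outcomes(new String[] {"a=1", "x=b"}, new String[] {"b=1", "y=a"}));
        // Each thread's independent statements swapped: prints [x=0,y=0, x=0,y=1, x=1,y=0]
        System.out.println(outcomes(new String[] {"x=b", "a=1"}, new String[] {"y=a", "b=1"}));
    }
}
```

In program order, none of the six interleavings yields x=0 and y=0; once each thread's statements are swapped, that result becomes reachable.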

3. Thread Safety Solutions In Java

Thread safety is a computer programming concept applicable to multi-threaded code.

Thread-safe code handles shared data in a way that ensures all threads operate correctly and meet their design specifications without unintended interference.

In Java, there are various strategies for writing thread-safe code.

3.1 Synchronization (Mutual Exclusion)

Access to shared data is serialized using mechanisms that ensure only one thread reads or writes to the shared data at any time.

Java provides two mechanisms to control mutually exclusive access by multiple threads to shared data:

- The synchronized keyword, implemented by the JVM;

- The ReentrantLock class, implemented by the JDK.
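As a sketch of how these two mechanisms fix the earlier ThreadUnsafeExample, the counter can be guarded either with synchronized methods or with a ReentrantLock. The names SafeCounters, SynchronizedCounter, LockCounter and runConcurrently are hypothetical; only the synchronized keyword, ReentrantLock.lock()/unlock(), and the executor classes come from the JDK.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical thread-safe variants of the earlier Counter.
class SafeCounters {

    // Option 1: the synchronized keyword. Only one thread at a time can hold
    // this object's monitor, so count++ runs as an indivisible unit.
    static class SynchronizedCounter {
        private int count = 0;

        synchronized void add() { count++; }

        synchronized int get() { return count; }
    }

    // Option 2: an explicit ReentrantLock. unlock() sits in finally so the
    // lock is released even if the critical section throws.
    static class LockCounter {
        private final ReentrantLock lock = new ReentrantLock();
        private int count = 0;

        void add() {
            lock.lock();
            try {
                count++;
            } finally {
                lock.unlock();
            }
        }

        int get() {
            lock.lock();
            try {
                return count;
            } finally {
                lock.unlock();
            }
        }
    }

    // Submits 'tasks' concurrent calls to 'increment' and waits for all of them.
    static void runConcurrently(int tasks, Runnable increment) {
        CountDownLatch done = new CountDownLatch(tasks);
        ExecutorService es = Executors.newCachedThreadPool();
        try {
            for (int i = 0; i < tasks; i++) {
                es.execute(() -> {
                    increment.run();
                    done.countDown();
                });
            }
            done.await();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            es.shutdown();
        }
    }

    public static void main(String[] args) {
        SynchronizedCounter c1 = new SynchronizedCounter();
        runConcurrently(1000, c1::add);
        System.out.println("synchronized:  " + c1.get()); // always 1000

        LockCounter c2 = new LockCounter();
        runConcurrently(1000, c2::add);
        System.out.println("ReentrantLock: " + c2.get()); // always 1000
    }
}
```

Both versions always reach 1000. ReentrantLock offers extras such as tryLock() and an optional fair mode, at the cost of having to release the lock explicitly in a finally block.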

3.2 Non-Blocking Synchronization

Mutual exclusion synchronization is a pessimistic concurrency strategy: it assumes that problems will definitely occur unless proper synchronization is in place, so it always synchronizes.

The main drawback of mutual exclusion synchronization is the performance cost of blocking and waking up threads, which is why it is also called blocking synchronization.

Whether or not there is actual contention for the shared data, it has to perform operations such as locking, switching between user mode and kernel mode, maintaining lock counters, and checking whether any blocked threads need to be woken up.

Non-blocking synchronization is an optimistic concurrency strategy based on conflict detection.

It performs the operation first. If no other thread contended for the shared data, the operation succeeds; otherwise, it takes compensating measures, typically retrying until it succeeds.

This optimistic strategy does not require threads to block, so it is called non-blocking synchronization.

Java provides the following mechanisms for non-blocking synchronization:

- CAS (Compare And Swap);

- Atomic Classes.
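The optimistic "try first, retry on conflict" strategy maps directly onto CAS. Below is a minimal sketch, assuming java.util.concurrent.atomic.AtomicInteger as the CAS primitive: addWithCasLoop hand-writes the retry loop with compareAndSet, while addBuiltIn uses incrementAndGet, which performs the whole atomic increment in a single call. The class CasCounter and its methods are hypothetical names.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical counter showing a hand-written CAS loop next to the built-in atomic call.
class CasCounter {

    private final AtomicInteger count = new AtomicInteger(0);

    // Optimistic update written by hand: read the current value, compute the
    // new one, and publish it only if no other thread changed it meanwhile.
    int addWithCasLoop() {
        while (true) {
            int current = count.get();
            int next = current + 1;
            if (count.compareAndSet(current, next)) {
                return next; // no conflict: the update succeeded
            }
            // Conflict: another thread updated count first, so retry.
        }
    }

    // The atomic class offers the same atomic increment as a single call.
    int addBuiltIn() {
        return count.incrementAndGet();
    }

    int get() {
        return count.get();
    }

    // Starts threadCount threads; each calls both methods incrementsPerThread times.
    static int runConcurrently(int threadCount, int incrementsPerThread) {
        CasCounter counter = new CasCounter();
        Thread[] threads = new Thread[threadCount];
        for (int t = 0; t < threadCount; t++) {
            threads[t] = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    counter.addWithCasLoop();
                    counter.addBuiltIn();
                }
            });
            threads[t].start();
        }
        try {
            for (Thread thread : threads) {
                thread.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return counter.get();
    }

    public static void main(String[] args) {
        // 4 threads x 10,000 iterations x 2 increments: prints 80000
        System.out.println(runConcurrently(4, 10_000));
    }
}
```

No thread ever blocks on a lock here; a thread that loses a race simply re-reads the value and tries again.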

3.3 No Synchronization

Synchronization is not always necessary to ensure thread safety.

If a method does not involve shared data, it naturally does not need any synchronization.

Java provides the following mechanisms for thread safety without synchronization:

- Stack Closure (also known as stack confinement);

- Thread Local Storage.
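A minimal sketch of both ideas, with hypothetical names: sumTo keeps all of its state in local variables, which live on the calling thread's stack (stack closure), and incrementTimes keeps a per-thread counter in a ThreadLocal created with ThreadLocal.withInitial (available since Java 8).

```java
// Hypothetical examples of thread safety without synchronization.
class NoSyncExamples {

    // Stack closure: sum and i are local variables, so every call gets its own
    // copies on the calling thread's stack and no other thread can reach them.
    static int sumTo(int n) {
        int sum = 0;
        for (int i = 1; i <= n; i++) {
            sum += i;
        }
        return sum;
    }

    // Thread local storage: each thread that uses COUNTER gets its own
    // independent value, initialized to 0 on first access.
    private static final ThreadLocal<Integer> COUNTER = ThreadLocal.withInitial(() -> 0);

    static int incrementTimes(int times) {
        for (int i = 0; i < times; i++) {
            COUNTER.set(COUNTER.get() + 1); // touches only this thread's copy
        }
        int result = COUNTER.get();
        COUNTER.remove(); // clear this thread's value when done
        return result;
    }

    // Runs incrementTimes on two separate threads; each sees only its own counter.
    static int[] runInTwoThreads(int timesA, int timesB) {
        int[] results = new int[2];
        Thread a = new Thread(() -> results[0] = incrementTimes(timesA));
        Thread b = new Thread(() -> results[1] = incrementTimes(timesB));
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return results;
    }

    public static void main(String[] args) {
        int[] results = runInTwoThreads(100, 200);
        // prints "100 200 5050"
        System.out.println(results[0] + " " + results[1] + " " + sumTo(100));
    }
}
```

Calling remove() matters with thread pools: a pooled thread is reused, so without it the old value would carry over into the next task that runs on that thread.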